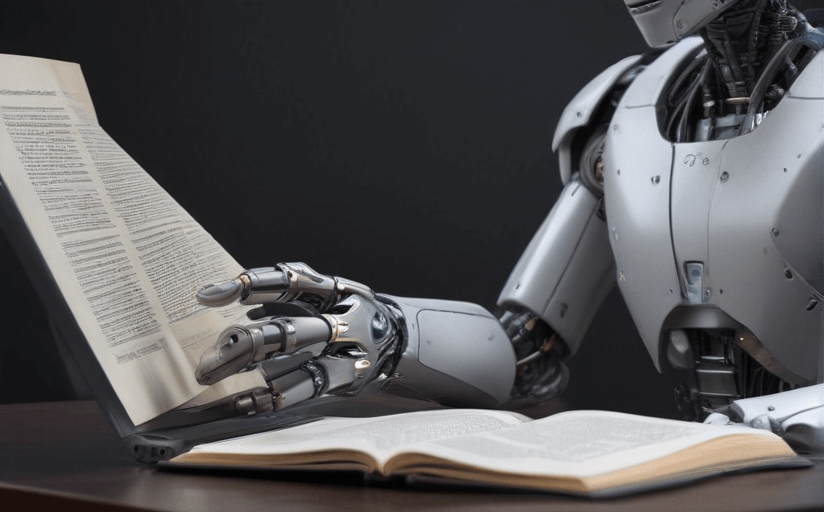

Explore the Ethical Implications of Artificial Intelligence and Automation

Artificial intelligence (AI) and automation are evolving rapidly and bringing significant changes to sectors around the world. Machine learning and automated systems now handle tasks that once called for human judgment. As these technologies become more prevalent, they raise important questions about their ethical implications.

Ethical Challenges and Moral Dimensions of AI and Automation

One significant ethical concern is bias in decision-making algorithms. Because these systems often learn from human-generated data, they can absorb and propagate the biases in that data. For example, a hiring tool developed by Amazon reportedly discriminated against women, reflecting bias absorbed from historical data.
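One simple way to check for this kind of bias is to compare how often a system selects candidates from different groups. The sketch below is only an illustration: the function names and the screening decisions are hypothetical and invented for this example, not drawn from any real system. It computes the selection rate for each group and the ratio of the lowest rate to the highest. A ratio well below 1 suggests the outcomes deserve a closer look; a threshold of 0.8 (the "four-fifths rule" from US employment guidelines) is a commonly used rule of thumb, not a definitive test.

```python
from collections import Counter


def selection_rates(records):
    """Return the fraction of positive decisions for each group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True if the candidate was chosen.
    """
    totals = Counter()
    positives = Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so the rates do not differ
    return min(rates.values()) / highest


# Hypothetical screening decisions, invented for illustration only.
decisions = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A check like this only describes outcomes. It does not explain why the rates differ, so a low ratio is a prompt for human review rather than proof of discrimination.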

Privacy is another ethical concern. AI systems often rely heavily on personal data to improve their performance. Without comprehensive policies governing data collection, use, and protection, user privacy is easily compromised.

Perhaps the most controversial ethical issue is job displacement. As AI and automation become more capable and cost-effective, they are increasingly replacing human labor in several industries.

Finally, accountability is a concern: identifying the source of an error or malfunction in an AI system can be difficult, which makes it hard to determine who is responsible.

Influence on Development and Application of AI

These ethical implications are considerably influencing the development and use of AI and automation. Public discourse and policy are starting to focus more on promoting bias-free, transparent AI applications, respecting privacy rights, addressing job disruption, and establishing accountability mechanisms.

Measures to Ensure Ethical Use

To ensure the ethical use of AI and automation, it is critical to have robust regulatory frameworks, ethical codes, and guiding principles promoted by tech companies, scholars, and policymakers. Input from cross-disciplinary teams - ethicists, social scientists, and technologists - can also help build fairness and inclusivity into AI systems.

Real-life Instances and Responses

Companies like Google have faced backlash over the ethics of their AI work. Public pressure over Project Maven pushed the company to publish its AI Principles, and its Dragonfly venture drew further criticism. Scholarly efforts such as the Montreal Declaration and publications by institutes like the Future Society are also shaping the discourse around AI ethics. Policymakers are gradually recognizing the need for effective regulation as well, as shown by the European Union's GDPR and India's Personal Data Protection Bill.
